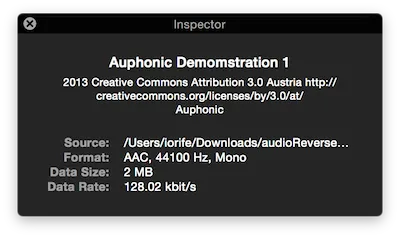

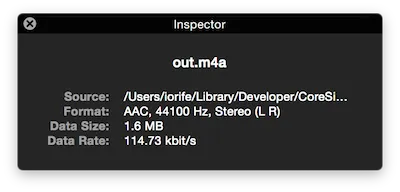

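I am trying to reverse audio on iOS using AVAsset and AVAssetWriter. The following code works, but the output file is shorter than the input file. For example, the input file has a duration of 1:59, but the output file has a duration of 1:50, with the same audio content.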

    - (void)reverse:(AVAsset *)asset
    {
        AVAssetReader* reader = [[AVAssetReader alloc] initWithAsset:asset error:nil];
        AVAssetTrack* audioTrack = [[asset tracksWithMediaType:AVMediaTypeAudio] objectAtIndex:0];

        NSMutableDictionary* audioReadSettings = [NSMutableDictionary dictionary];
        [audioReadSettings setValue:[NSNumber numberWithInt:kAudioFormatLinearPCM]
                             forKey:AVFormatIDKey];

        AVAssetReaderTrackOutput* readerOutput = [AVAssetReaderTrackOutput assetReaderTrackOutputWithTrack:audioTrack outputSettings:audioReadSettings];
        [reader addOutput:readerOutput];
        [reader startReading];

        NSDictionary *outputSettings = [NSDictionary dictionaryWithObjectsAndKeys:
                                        [NSNumber numberWithInt: kAudioFormatMPEG4AAC], AVFormatIDKey,
                                        [NSNumber numberWithFloat:44100.0], AVSampleRateKey,
                                        [NSNumber numberWithInt:2], AVNumberOfChannelsKey,
                                        [NSNumber numberWithInt:128000], AVEncoderBitRateKey,
                                        [NSData data], AVChannelLayoutKey,
                                        nil];

        AVAssetWriterInput *writerInput = [[AVAssetWriterInput alloc] initWithMediaType:AVMediaTypeAudio
                                                                         outputSettings:outputSettings];

        NSString *exportPath = [NSTemporaryDirectory() stringByAppendingPathComponent:@"out.m4a"];
        NSURL *exportURL = [NSURL fileURLWithPath:exportPath];
        NSError *writerError = nil;
        AVAssetWriter *writer = [[AVAssetWriter alloc] initWithURL:exportURL
                                                          fileType:AVFileTypeAppleM4A
                                                             error:&writerError];

        [writerInput setExpectsMediaDataInRealTime:NO];
        [writer addInput:writerInput];
        [writer startWriting];
        [writer startSessionAtSourceTime:kCMTimeZero];

        CMSampleBufferRef sample = [readerOutput copyNextSampleBuffer];
        NSMutableArray *samples = [[NSMutableArray alloc] init];

        while (sample != NULL) {
            sample = [readerOutput copyNextSampleBuffer];

            if (sample == NULL)
                continue;

            [samples addObject:(__bridge id)(sample)];
            CFRelease(sample);
        }

        NSArray* reversedSamples = [[samples reverseObjectEnumerator] allObjects];

        for (id reversedSample in reversedSamples) {
            if (writerInput.readyForMoreMediaData) {
                [writerInput appendSampleBuffer:(__bridge CMSampleBufferRef)(reversedSample)];
            }
            else {
                [NSThread sleepForTimeInterval:0.05];
            }
        }

        [writerInput markAsFinished];

        dispatch_queue_t queue = dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_HIGH, 0);
        dispatch_async(queue, ^{
            [writer finishWriting];
        });
    }

Update:

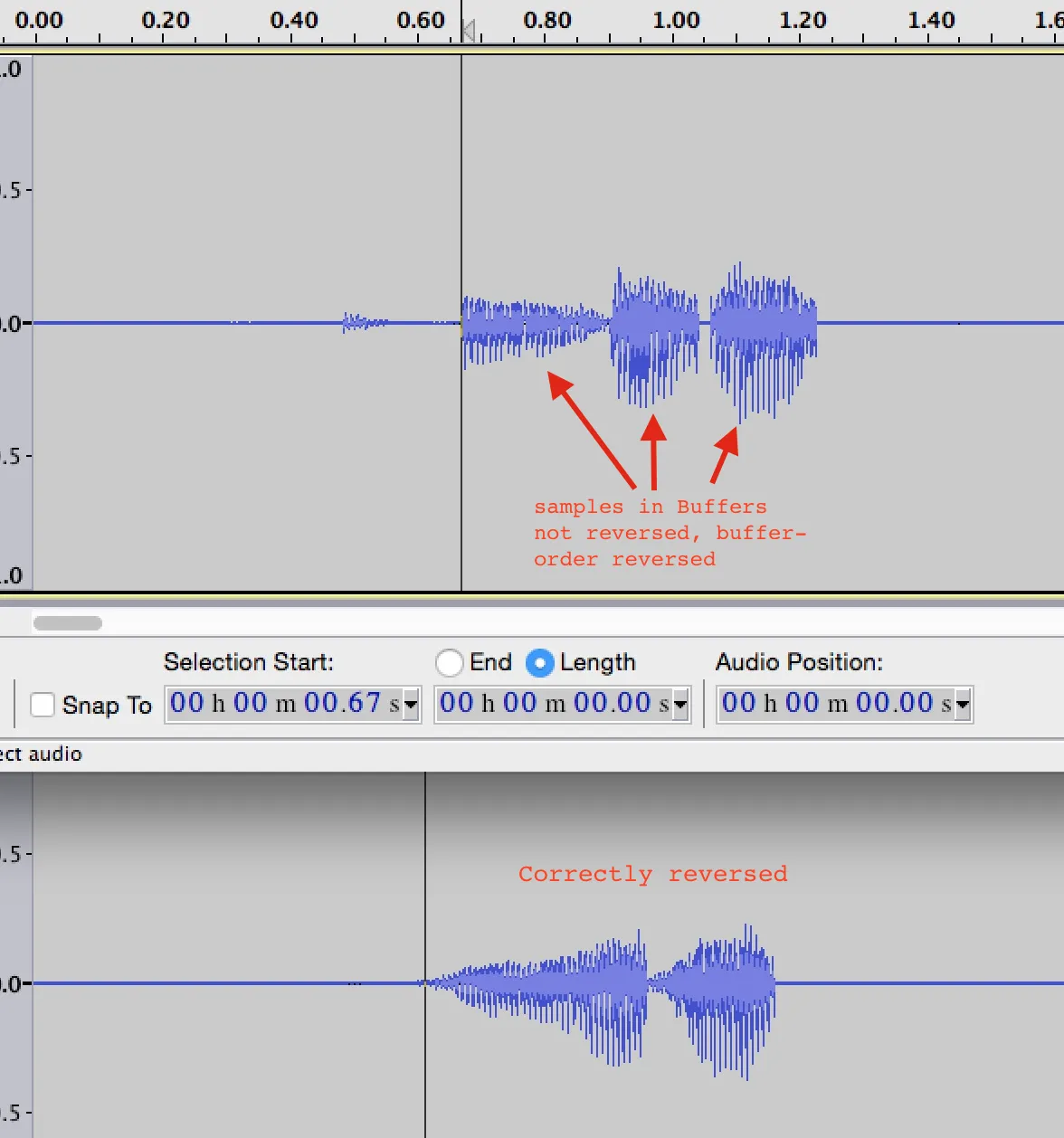

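If I write the samples directly inside the first while loop, everything works (even with the writerInput.readyForMoreMediaData check). In that case the resulting file has exactly the same duration as the original. But if I write the same samples from the reversed NSArray, the result is shortened.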

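I think your current scheme could work if you also reversed each sample of every 8192 buffer. Personally, I would not recommend using NSArray enumerators for signal processing, but if you operate at the sample level, you can use it.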
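To illustrate the per-buffer reversal suggested above, here is a minimal sketch in plain C (which also compiles inside an Objective-C file). It assumes the decoded PCM is interleaved 16-bit signed integers; the question's read settings only request kAudioFormatLinearPCM, so the actual bit depth and layout would need to be checked or set explicitly. The function name `reverse_pcm_frames` is hypothetical, and getting a writable pointer to the buffer's bytes (for example through the sample buffer's CMBlockBuffer) is left out.

```c
#include <stddef.h>
#include <stdint.h>

/* Reverse the frame order of an interleaved 16-bit PCM buffer in place.
 * A frame is one sample per channel, so whole frames are swapped; this
 * keeps each channel's samples in the same channel slot.
 * Assumption: the data is interleaved int16_t, which the original post
 * does not confirm. */
static void reverse_pcm_frames(int16_t *samples, size_t frame_count, size_t channels)
{
    if (samples == NULL || frame_count < 2 || channels == 0) {
        return; /* nothing to reverse */
    }

    size_t lo = 0;
    size_t hi = frame_count - 1;

    while (lo < hi) {
        /* Swap frame `lo` with frame `hi`, one channel at a time. */
        for (size_t c = 0; c < channels; c++) {
            int16_t tmp = samples[lo * channels + c];
            samples[lo * channels + c] = samples[hi * channels + c];
            samples[hi * channels + c] = tmp;
        }
        lo++;
        hi--;
    }
}
```

Reversing the order of the buffers and then reversing the frames inside each buffer gives the same sample order as reversing the whole signal at once. Reversing only the buffer order, as the code in the question does, leaves each buffer's contents playing forward.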
