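*Update: I have since developed this into a complete script/module; you can read about it here and download the code here.

I ran into the same problem, so I started writing a PowerShell script that syncs the data instead of overwriting it.

By default the Write-S3Object cmdlet overwrites existing objects, and as far as I could check there is no option to tell it not to overwrite existing files.

This is how I check whether the local folder already exists on S3: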

    if ((Get-S3Object -BucketName $BucketName -KeyPrefix $Destination -MaxKey 2).Count -lt 2) {
        # Fewer than two keys under the prefix: treat the folder as missing and upload all of it
    }
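The folder check above can be wrapped in a small helper so the intent is easier to read. This is only a sketch: Test-S3FolderPopulated is a hypothetical name that is not part of the script, and it keeps the same rule of thumb (at least two keys under the prefix means the folder is treated as present).

```powershell
# Hypothetical helper, not part of the original script.
function Test-S3FolderPopulated {
    param(
        [Parameter(Mandatory)] [string]$BucketName,
        [Parameter(Mandatory)] [string]$KeyPrefix
    )
    # Ask S3 for at most two keys under the prefix. @() forces an array,
    # so .Count is reliable even when zero or one object comes back.
    $objects = @(Get-S3Object -BucketName $BucketName -KeyPrefix $KeyPrefix -MaxKey 2)
    return ($objects.Count -ge 2)
}

# Example call (needs configured AWS credentials, so it is left commented out):
# if (-not (Test-S3FolderPopulated -BucketName $BucketName -KeyPrefix $Destination)) { ... }
```

Requesting only two keys keeps the call cheap, because the check never needs the full listing.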

This is how I check whether a file exists and whether the S3 object is at least as large as the local file.

    $fFile = Get-ChildItem -Force -File -Path "LocalFileName"
    $S3file = Get-S3Object -BucketName "S3BucketName" -Key "S3FilePath"
    $s3obj = ($S3file.key -split "/")[-1]
    if ($fFile.name -eq $s3obj -and $S3file.size -ge $fFile.Length) {
        WriteWarning "File exists: $s3obj"
    }
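The same rule can be written as a pure function, which makes it easy to try without touching S3. This is a minimal sketch: Test-UploadNeeded and its parameter names are hypothetical, and it assumes the S3 object exposes Key and Size properties as in the snippet above. Note that a name and size comparison will not notice a file whose content changed but whose size did not.

```powershell
# Hypothetical helper, not part of the original script.
function Test-UploadNeeded {
    param(
        [Parameter(Mandatory)] [string]$LocalName,
        [Parameter(Mandatory)] [long]$LocalLength,
        $S3Object   # $null when the key was not found; otherwise needs .Key and .Size
    )
    if ($null -eq $S3Object) { return $true }

    # Last segment of the key is the file name on S3.
    $s3Name = ($S3Object.Key -split '/')[-1]

    # Skip the upload only when the names match and S3 holds at least as many bytes.
    return -not ($LocalName -eq $s3Name -and $S3Object.Size -ge $LocalLength)
}

# Stand-in for an object returned by Get-S3Object.
$existing = [pscustomobject]@{ Key = 'backup/docs/report.pdf'; Size = 2048 }
Test-UploadNeeded -LocalName 'report.pdf' -LocalLength 2048 -S3Object $existing
Test-UploadNeeded -LocalName 'report.pdf' -LocalLength 4096 -S3Object $existing
```

The first call returns False (the file is already there), the second returns True because the local file is larger than the S3 copy.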

Here is the complete script.

    Function Sync-ToS3Bucket {
        [CmdletBinding()]
        param (
            [Parameter(Mandatory=$True,Position=1)]
            [string]$BucketName,
            [Parameter(Mandatory=$True,Position=2)]
            [string]$LocalFolderPath,
            [string]$S3DestinationFolder,
            [string]$S3ProfileName,
            [string]$AccessKey,
            [string]$SecretKey,
            [switch]$ShowProgress
        )

        # Timestamped, colour-coded logging helpers
        Function WriteInfo ($msg) {
            Write-Host "[$(get-date)]:: $msg"
        }
        Function WriteAction ($msg) {
            Write-Host "[$(get-date)]:: $msg" -ForegroundColor Cyan
        }
        Function WriteWarning ($msg) {
            Write-Host "[$(get-date)]:: $msg" -ForegroundColor Yellow
        }
        Function WriteError ($msg) {
            Write-Host "[$(get-date)]:: $msg" -ForegroundColor Red
        }
        Function WriteLabel ($msg) {
            "`n`n`n"
            Write-Host ("*" * ("[$(get-date)]:: $msg").Length)
            $msg
            Write-Host ("*" * ("[$(get-date)]:: $msg").Length)
        }

        # Report the upload speed in the largest sensible unit
        function Calculate-TransferSpeed ($size, $eTime) {
            WriteInfo "Total Data: $size bytes, Total Time: $eTime seconds"
            if ($size -ge 1000000) {
                WriteInfo ("Upload speed : " + [math]::round($($size / 1MB)/$eTime, 2) + " MB/Sec")
            }
            Elseif ($size -ge 1000 -and $size -lt 1000000) {
                WriteInfo ("Upload speed : " + [math]::round($($size / 1kb)/$eTime, 2) + " KB/Sec")
            }
            Else {
                if ($size -ne $null -and $size) {
                    WriteInfo ("Upload speed : " + [math]::round($size/$eTime, 2) + " Bytes/Sec")
                }
                else {
                    WriteInfo ("Upload speed : 0 Bytes/Sec")
                }
            }
        }

        # Report a file or folder size in the largest sensible unit
        function Get-ItemSize ($size, $msg) {
            if ($size -ge 1000000000) {
                WriteInfo "Upload $msg Size : $([math]::round($($size / 1gb),2)) GB"
            }
            Elseif ($size -ge 1000000 -and $size -lt 1000000000) {
                WriteInfo "Upload $msg Size : $([math]::round($($size / 1MB),2)) MB"
            }
            Elseif ($size -ge 1000 -and $size -lt 1000000) {
                WriteInfo "Upload $msg Size : $([math]::round($($size / 1kb),2)) KB"
            }
            Else {
                if ($size -ne $null -and $size) {
                    WriteInfo "Upload $msg Size : $([string]$size) Bytes"
                }
                else {
                    WriteInfo "Upload $msg Size : 0 Bytes"
                }
            }
        }

        clear
        "`n`n`n`n`n`n`n`n`n`n"
        $OstartTime = get-date

        # Strip a single trailing backslash; EndsWith is safe when the optional parameter is empty
        if ($LocalFolderPath.EndsWith('\')) {
            $LocalFolderPath = $LocalFolderPath.Substring(0, $LocalFolderPath.Length - 1)
        }
        if ($S3DestinationFolder.EndsWith('\')) {
            $S3DestinationFolder = $S3DestinationFolder.Substring(0, $S3DestinationFolder.Length - 1)
        }

        set-location $LocalFolderPath
        $LocalFolderPath = $PWD.Path
        Start-Transcript "AWS-S3Upload.log" -Append
        "`n`n`n`n`n`n`n`n`n`n"
        WriteLabel "Script start time: $OstartTime"

        # Note: only subdirectories are enumerated here, so files that sit directly
        # in $LocalFolderPath are not processed by the loop below.
        WriteAction "Getting sub directories"
        $Folders = Get-ChildItem -Path $LocalFolderPath -Directory -Recurse -Force | select FullName
        WriteAction "Getting list of all files"
        $allFiles = Get-ChildItem -Path $LocalFolderPath -File -Recurse -Force | select FullName
        WriteAction "Getting folder count"
        $FoldersCount = $Folders.count
        WriteAction "Getting file count"
        $allFilesCount = $allFiles.count

        $i = 0
        foreach ($Folder in $Folders.fullname) {
            $UploadFolder = $Folder.Substring($LocalFolderPath.length)
            $Source = $Folder
            $Destination = $S3DestinationFolder + $UploadFolder

            if ($ShowProgress) {
                $i++
                $Percent = [math]::Round($($($i/$FoldersCount*100)))
                Write-Progress -Activity "Processing folder: $i out of $FoldersCount" -Status "Overall Upload Progress: $Percent`% || Current Upload Folder Name: $UploadFolder" -PercentComplete $Percent
            }

            "`n`n"
            "_" * $("[$(get-date)]:: Local Folder Name : $UploadFolder".Length)
            WriteInfo "Local Folder Name : $UploadFolder"
            WriteInfo "S3 Folder path : $Destination"
            WriteAction "Getting folder size"
            $Files = Get-ChildItem -Force -File -Path $Source | Measure-Object -sum Length
            Get-ItemSize $Files.sum "Folder"

            if ((Get-S3Object -BucketName $BucketName -KeyPrefix $Destination -MaxKey 2).count -lt 2) {
                # Folder is not on S3 yet: upload it in one call
                WriteAction "Folder does not exist"
                WriteAction "Uploading all files"
                WriteInfo ("Upload File Count : " + $files.count)
                $startTime = get-date
                WriteInfo "Upload Start Time : $startTime"
                Write-S3Object -BucketName $BucketName -KeyPrefix $Destination -Folder $Source -Verbose -ConcurrentServiceRequest 100
                $stopTime = get-date
                WriteInfo "Upload Finished Time : $stopTime"
                $elapsedTime = $stopTime - $StartTime
                WriteInfo ("Time Elapsed : " + $elapsedTime.days + " Days, " + $elapsedTime.hours + " Hours, " + $elapsedTime.minutes + " Minutes, " + $elapsedTime.seconds + " Seconds")
                Calculate-TransferSpeed $files.Sum $elapsedTime.TotalSeconds
            }
            else {
                # Folder exists on S3: check each local file and upload only what is missing or smaller on S3
                WriteAction "Getting list of local files in local folder to transfer"
                $fFiles = Get-ChildItem -Force -File -Path $Source
                WriteAction "Counting files"
                $fFilescount = $ffiles.count
                WriteInfo "Upload File Count : $fFilescount"

                $j = 0
                foreach ($fFile in $fFiles) {
                    if ($ShowProgress) {
                        $j++
                        $fPercent = [math]::Round($($($j/$fFilescount*100)))
                        Write-Progress -Activity "Processing File: $j out of $fFilescount" -Id 1 -Status "Current Progress: $fPercent`% || Processing File: $($fFile.Name)" -PercentComplete $fPercent
                    }

                    # Use .Name explicitly: newer PowerShell versions expand a FileInfo in a string to its full path
                    $S3file = Get-S3Object -BucketName $BucketName -Key "$Destination\$($fFile.Name)"
                    $s3obj = $S3file.key -replace "/","\"

                    if ("$S3DestinationFolder$UploadFolder\$($fFile.Name)" -eq $s3obj -and $S3file.size -ge $fFile.Length) {
                        WriteWarning "File exists: $s3obj"
                    }
                    else {
                        WriteAction "Uploading file : $($fFile.Name)"
                        Get-ItemSize $fFile.Length "File"
                        $startTime = get-date
                        WriteInfo "Upload Start Time : $startTime"
                        Write-S3Object -BucketName $BucketName -File $fFile.fullname -Key "$Destination\$($fFile.Name)" -ConcurrentServiceRequest 100 -Verbose
                        $stopTime = get-date
                        WriteInfo "Upload Finished Time : $stopTime"
                        $elapsedTime = $stopTime - $StartTime
                        WriteInfo ("Time Elapsed : " + $elapsedTime.days + " Days, " + $elapsedTime.hours + " Hours, " + $elapsedTime.minutes + " Minutes, " + $elapsedTime.seconds + " Seconds")
                        Calculate-TransferSpeed $fFile.Length $elapsedTime.TotalSeconds
                        # No break here, so the loop goes on to check the remaining files in this folder
                    }
                }
            }
        }

        $OstopTime = get-date
        "Script Finished Time : $OstopTime"
        $elapsedTime = $OstopTime - $OStartTime
        "Time Elapsed : " + $elapsedTime.days + " Days, " + $elapsedTime.hours + " Hours, " + $elapsedTime.minutes + " Minutes, " + $elapsedTime.seconds + " Seconds"
        stop-transcript
    }

Run the script in your AWS PowerShell session; it defines a function that you can then call like a cmdlet:

    Sync-ToS3Bucket -BucketName YourS3BucketName -LocalFolderPath "C:\AmazonDrive\" -S3DestinationFolder YourDestinationS3FolderName -ShowProgress:$true

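Make sure to use relative paths. Also make sure you have initialized your default AWS configuration by running Initialize-AWSDefaultConfiguration. By default the script does not show progress, which helps performance, but you can turn it on with the -ShowProgress:$true switch.

The script also creates the folder structure, so you can use it to sync a local folder with S3. If the folder does not exist on S3, the script uploads the entire folder. If the folder exists, the script walks through each file in the local folder and makes sure it exists on S3.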
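To make the folder-structure mapping concrete, here is a small sketch of how a local path can be turned into an S3 key. S3 keys conventionally use forward slashes as the folder delimiter, while the script builds its paths with backslashes and compares keys after swapping the slashes. ConvertTo-S3Key is a hypothetical helper, not part of the script, and it assumes the file path starts with the local root.

```powershell
# Hypothetical helper, not part of the original script.
function ConvertTo-S3Key {
    param(
        [Parameter(Mandatory)] [string]$LocalRoot,
        [Parameter(Mandatory)] [string]$FullName,
        [string]$S3DestinationFolder = ''
    )
    $seps = [char[]]'\/'

    # Path of the file relative to the local root (assumes $FullName starts with $LocalRoot).
    $root     = $LocalRoot.TrimEnd($seps)
    $relative = $FullName.Substring($root.Length).TrimStart($seps)

    # Prepend the optional destination folder, then normalise the separators.
    $prefix = $S3DestinationFolder.Trim($seps)
    $parts  = @($prefix, $relative) | Where-Object { $_ }
    return (($parts -join '/') -replace '\\', '/')
}

ConvertTo-S3Key -LocalRoot 'C:\AmazonDrive\' -FullName 'C:\AmazonDrive\Photos\2016\a.jpg' -S3DestinationFolder 'backup'
```

With the example values this yields backup/Photos/2016/a.jpg, which mirrors the local folder layout under the destination folder.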

I am still improving the script and will publish it on my GitHub profile. Let me know if you have any feedback.

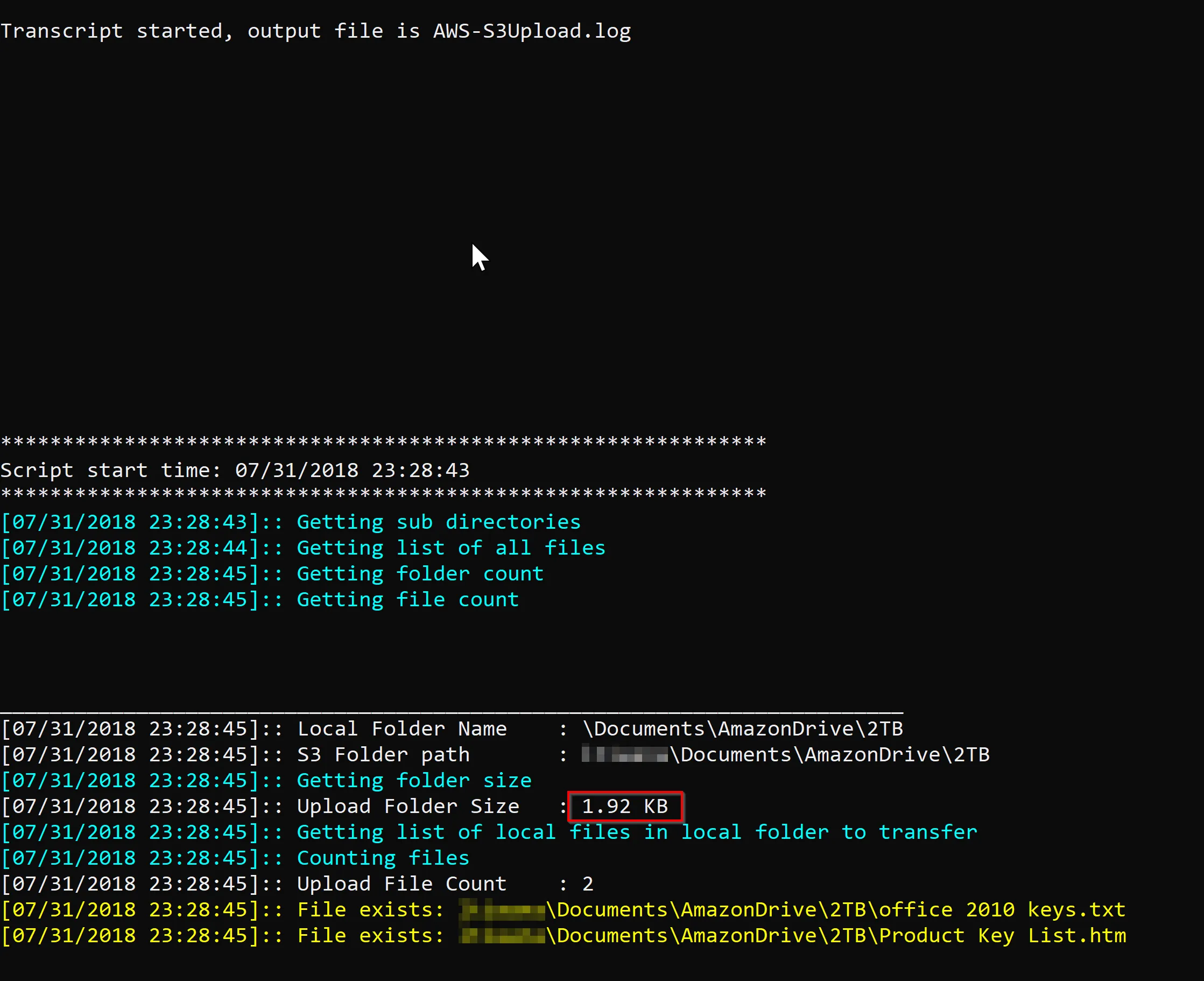

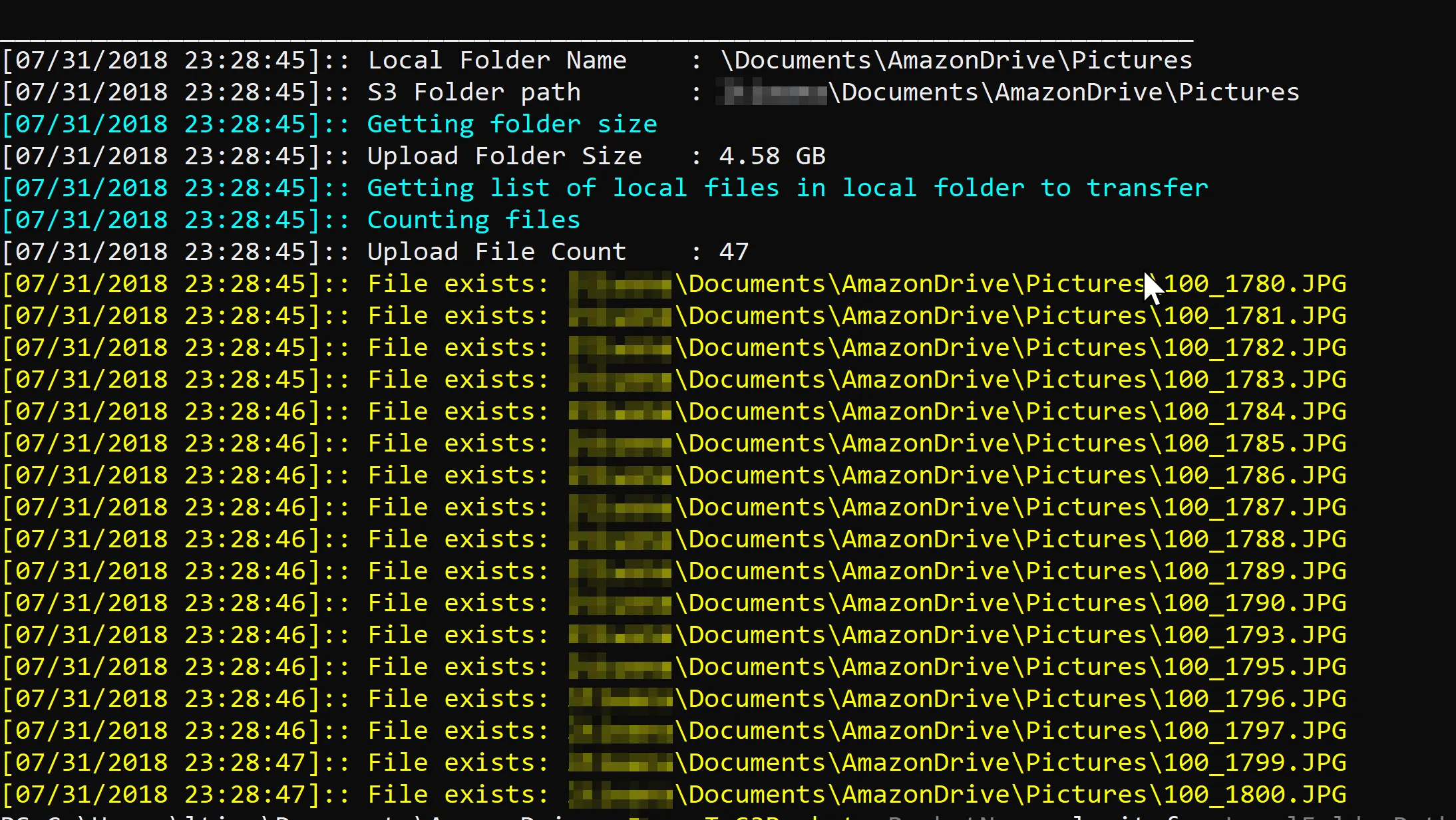

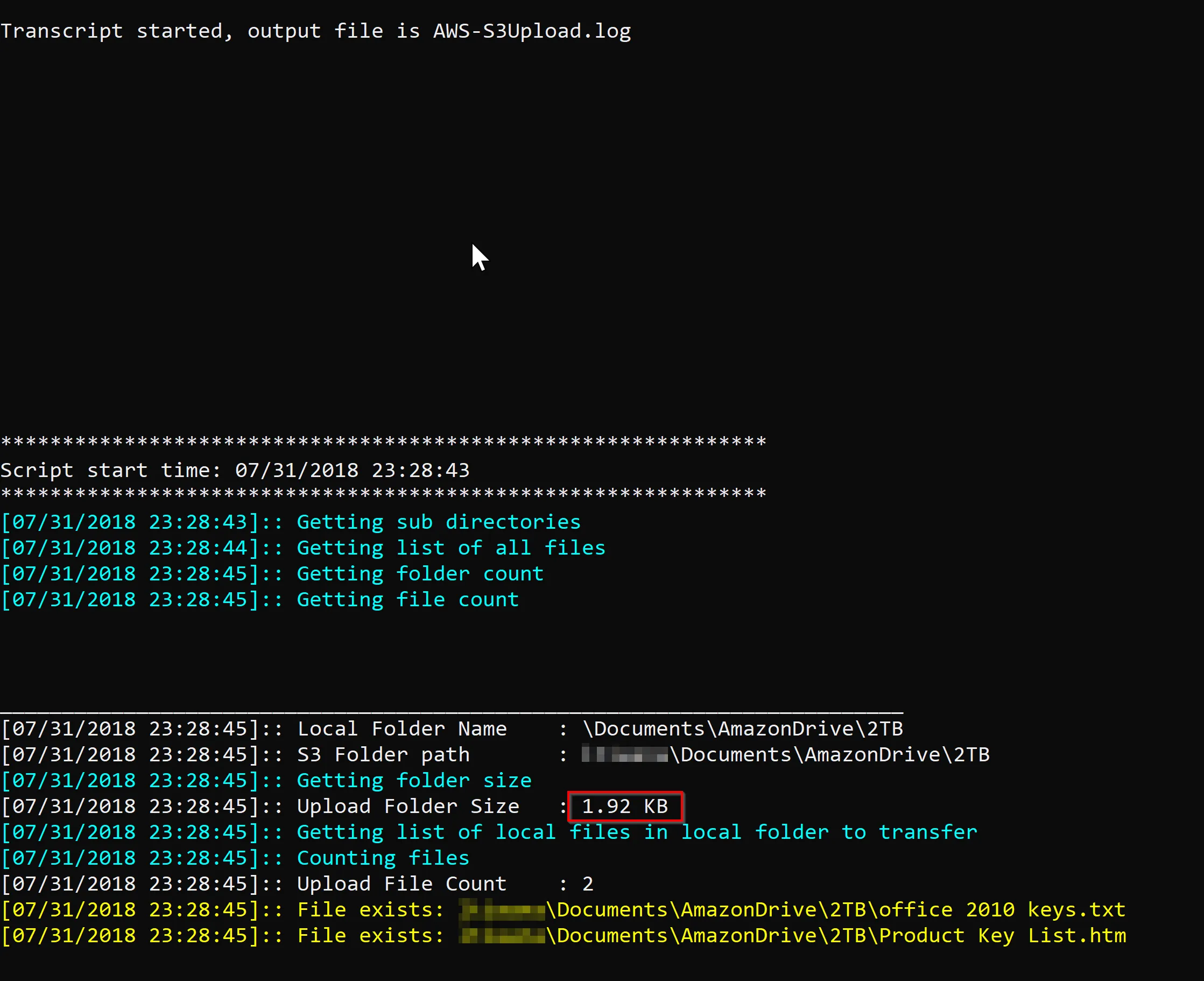

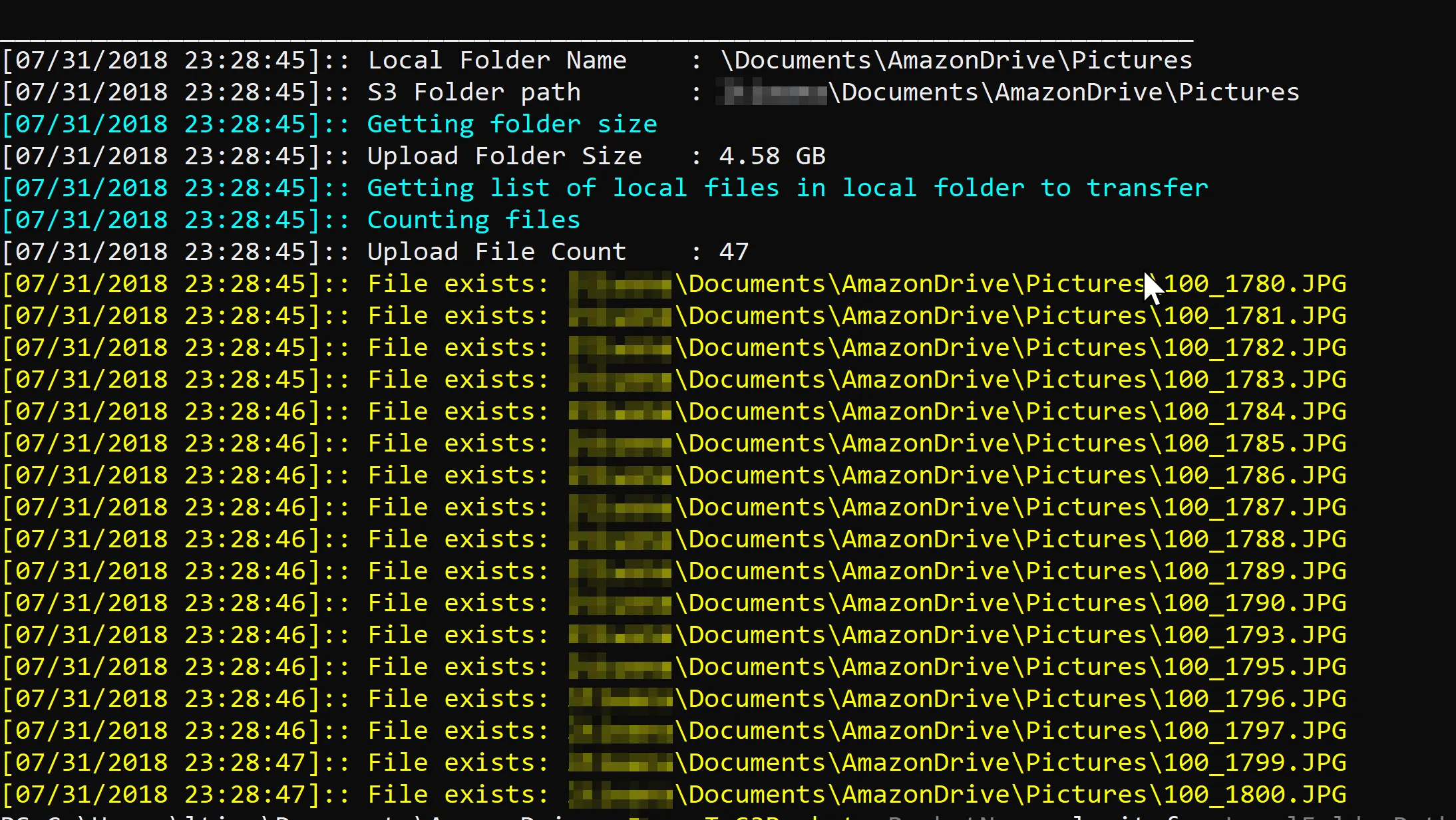

一些截图: