Taking screenshots in the browser with HTML5/Canvas/JavaScript


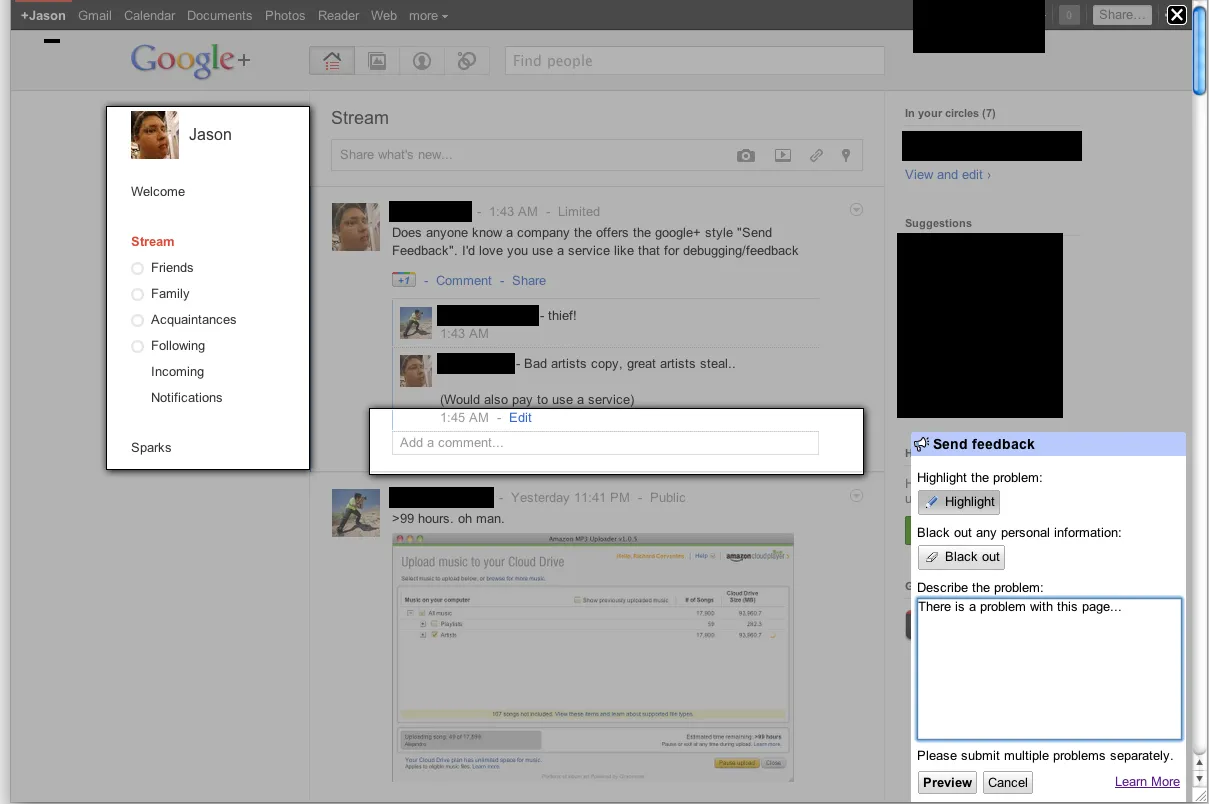

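Using canvas, you can render a fairly accurate representation of a page from its DOM. I have been working on a script that converts HTML into a canvas image, and today I decided to use it to send feedback the way you described. The script lets you create feedback forms that include a screenshot, created in the client's browser, along with the form itself. The screenshot is based on the DOM, so it may not be 100% faithful to the real rendering: it does not take an actual screenshot, but builds one from the information available on the page.

It doesn't require any rendering on the server, as the whole image is created in the client's browser. The HTML2Canvas script itself is still very experimental: it doesn't parse nearly as many CSS3 properties as I would like, and it has no support for loading CORS images, not even through a proxy.

Browser compatibility is still quite limited (not because more browsers couldn't be supported, I just haven't had time to make it more cross-browser).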
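To make the "build the screenshot from the DOM" idea concrete, here is a minimal sketch under heavily simplified assumptions: it only repaints each element's background box (no text, borders, images, z-index or CSS3), which is a tiny fraction of what html2canvas handles. The names `paintNode`, `describeElement` and `snapshotElement` are hypothetical and are not part of html2canvas.

```javascript
// Hypothetical sketch of DOM-to-canvas rendering: walk the element tree and
// repaint each element's background box onto a 2D context.
// Not the html2canvas API; only background colours are handled.

// Paint one node and its children. `node` is a plain description
// { x, y, width, height, background, children }, so the painter itself
// does not need a browser.
function paintNode(ctx, node, originX = 0, originY = 0) {
  const bg = node.background;
  // Skip transparent backgrounds (getComputedStyle reports them as rgba(0, 0, 0, 0))
  if (bg && bg !== 'transparent' && bg !== 'rgba(0, 0, 0, 0)') {
    ctx.fillStyle = bg;
    ctx.fillRect(node.x - originX, node.y - originY, node.width, node.height);
  }
  // Children are painted after their parent, so they end up on top
  for (const child of node.children || []) {
    paintNode(ctx, child, originX, originY);
  }
}

// Browser-only adapter: turn a DOM element into the plain description above
// using getBoundingClientRect() and getComputedStyle().
function describeElement(el) {
  const rect = el.getBoundingClientRect();
  return {
    x: rect.left + window.scrollX,
    y: rect.top + window.scrollY,
    width: rect.width,
    height: rect.height,
    background: getComputedStyle(el).backgroundColor,
    children: Array.from(el.children, describeElement),
  };
}

// Browser-only: render `root` into a new canvas of the same CSS size
// (devicePixelRatio is ignored for simplicity).
function snapshotElement(root) {
  const tree = describeElement(root);
  const canvas = document.createElement('canvas');
  canvas.width = Math.ceil(tree.width);
  canvas.height = Math.ceil(tree.height);
  paintNode(canvas.getContext('2d'), tree, tree.x, tree.y);
  return canvas;
}
```

In a page, `document.body.appendChild(snapshotElement(document.body))` would show the resulting "background-only" screenshot.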

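For more information, have a look at the examples here:

http://hertzen.com/experiments/jsfeedback/

Edit: The html2canvas script is now available separately here, along with some examples here.

Edit 2: Another confirmation that Google uses a very similar method (in fact, based on the documentation, the only major difference is their asynchronous method of traversing/drawing) can be found in this presentation by Elliott Sprehn from the Google Feedback team: http://www.elliottsprehn.com/preso/fluentconf/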
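The asynchronous traversal mentioned in Edit 2 can be illustrated with a walk that yields back to the event loop every few nodes, so the page stays responsive while a large DOM is processed. This is a generic sketch of the technique, not Google's code; `walkInChunks` and its `chunkSize` parameter are hypothetical.

```javascript
// Yield to the event loop so rendering and input handling can run.
function yieldToEventLoop() {
  return new Promise(resolve => setTimeout(resolve, 0));
}

// Depth-first, pre-order walk over any tree whose nodes expose a `children`
// collection (DOM elements do). `visit` is called once per node; after every
// `chunkSize` visits the walk pauses via yieldToEventLoop().
// Resolves with the number of visited nodes.
async function walkInChunks(root, visit, chunkSize = 200) {
  const stack = [root];
  let count = 0;
  while (stack.length > 0) {
    const node = stack.pop();
    visit(node);
    // Push children in reverse so they are visited in document order
    const children = Array.from(node.children || []);
    for (let i = children.length - 1; i >= 0; i--) {
      stack.push(children[i]);
    }
    count += 1;
    if (count % chunkSize === 0) {
      await yieldToEventLoop();
    }
  }
  return count;
}
```

In a browser, `walkInChunks(document.body, el => { /* measure or paint el */ })` processes the page in slices instead of one long blocking task.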


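Your web app can now take a "native" screenshot of the client's entire desktop using getUserMedia():

Have a look at this example:

https://www.webrtc-experiment.com/Pluginfree-Screen-Sharing/

Currently, the client has to use Chrome and must enable screen capture support under chrome://flags.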


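Navigator.getUserMedia() has been deprecated, but right below the deprecation notice it says "Please use the newer navigator.mediaDevices.getUserMedia()", i.e. it has simply been replaced by a newer API. - levant pied

Use the getDisplayMedia API to get a screenshot as a Canvas or as a JPEG Blob / ArrayBuffer:

Fix 1: use getUserMedia with chromeMediaSource only in Electron.js

Fix 2: throw an error instead of returning a null object

Fix 3: fix the demo to prevent the error "getDisplayMedia must be called from a user gesture handler"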

```javascript
// docs: https://developer.mozilla.org/en-US/docs/Web/API/MediaDevices/getDisplayMedia
// see: https://www.webrtc-experiment.com/Pluginfree-Screen-Sharing/#20893521368186473
// see: https://github.com/muaz-khan/WebRTC-Experiment/blob/master/Pluginfree-Screen-Sharing/conference.js

function getDisplayMedia(options) {
  if (navigator.mediaDevices && navigator.mediaDevices.getDisplayMedia) {
    return navigator.mediaDevices.getDisplayMedia(options)
  }
  // older, non-standard or prefixed variants
  if (navigator.getDisplayMedia) {
    return navigator.getDisplayMedia(options)
  }
  if (navigator.webkitGetDisplayMedia) {
    return navigator.webkitGetDisplayMedia(options)
  }
  if (navigator.mozGetDisplayMedia) {
    return navigator.mozGetDisplayMedia(options)
  }
  throw new Error('getDisplayMedia is not defined')
}

function getUserMedia(options) {
  if (navigator.mediaDevices && navigator.mediaDevices.getUserMedia) {
    return navigator.mediaDevices.getUserMedia(options)
  }
  // the legacy navigator.getUserMedia variants take success/error callbacks
  // instead of returning a Promise, so wrap them in one
  const legacy = navigator.getUserMedia
    || navigator.webkitGetUserMedia
    || navigator.mozGetUserMedia
  if (legacy) {
    return new Promise((resolve, reject) => legacy.call(navigator, options, resolve, reject))
  }
  throw new Error('getUserMedia is not defined')
}

async function takeScreenshotStream() {
  // see: https://developer.mozilla.org/en-US/docs/Web/API/Window/screen
  const width = screen.width * (window.devicePixelRatio || 1)
  const height = screen.height * (window.devicePixelRatio || 1)

  const errors = []
  let stream
  try {
    stream = await getDisplayMedia({
      audio: false,
      // see: https://developer.mozilla.org/en-US/docs/Web/API/MediaStreamConstraints/video
      video: {
        width,
        height,
        frameRate: 1,
      },
    })
  } catch (ex) {
    errors.push(ex)
  }

  // for Electron: fall back to getUserMedia with chromeMediaSource,
  // only if getDisplayMedia did not already return a stream
  if (!stream && navigator.userAgent.indexOf('Electron') >= 0) {
    try {
      stream = await getUserMedia({
        audio: false,
        video: {
          mandatory: {
            chromeMediaSource: 'desktop',
            // chromeMediaSourceId: source.id,
            minWidth: width,
            maxWidth: width,
            minHeight: height,
            maxHeight: height,
          },
        },
      })
    } catch (ex) {
      errors.push(ex)
    }
  }

  if (errors.length) {
    console.debug(...errors)
    if (!stream) {
      throw errors[errors.length - 1]
    }
  }

  return stream
}

async function takeScreenshotCanvas() {
  const stream = await takeScreenshotStream()

  // from: https://dev59.com/BW445IYBdhLWcg3wWI6L#57665309
  const video = document.createElement('video')
  video.muted = true
  let result
  try {
    result = await new Promise((resolve, reject) => {
      video.onloadedmetadata = async () => {
        try {
          // wait until playback has actually started so a frame is available
          await video.play()
          video.pause()

          // from: https://github.com/kasprownik/electron-screencapture/blob/master/index.js
          const canvas = document.createElement('canvas')
          canvas.width = video.videoWidth
          canvas.height = video.videoHeight
          const context = canvas.getContext('2d')
          // see: https://developer.mozilla.org/en-US/docs/Web/API/HTMLVideoElement
          context.drawImage(video, 0, 0, video.videoWidth, video.videoHeight)
          resolve(canvas)
        } catch (ex) {
          reject(ex)
        }
      }
      video.onerror = () => reject(new Error('Cannot load the screen capture stream'))
      video.srcObject = stream
    })
  } finally {
    // stop sharing the screen even if capturing the frame failed
    stream.getTracks().forEach(track => track.stop())
  }

  if (result == null) {
    throw new Error('Cannot take canvas screenshot')
  }
  return result
}

// from: https://dev59.com/hqXja4cB1Zd3GeqPMBz6#46182044
function getJpegBlob(canvas) {
  return new Promise((resolve, reject) => {
    // docs: https://developer.mozilla.org/en-US/docs/Web/API/HTMLCanvasElement/toBlob
    canvas.toBlob(blob => {
      // toBlob passes null if the image cannot be encoded
      if (blob) resolve(blob)
      else reject(new Error('Cannot encode canvas as JPEG'))
    }, 'image/jpeg', 0.95)
  })
}

async function getJpegBytes(canvas) {
  const blob = await getJpegBlob(canvas)
  return new Promise((resolve, reject) => {
    const fileReader = new FileReader()
    fileReader.addEventListener('loadend', function () {
      if (this.error) {
        reject(this.error)
        return
      }
      resolve(this.result) // ArrayBuffer
    })
    fileReader.readAsArrayBuffer(blob)
  })
}

async function takeScreenshotJpegBlob() {
  const canvas = await takeScreenshotCanvas()
  return getJpegBlob(canvas)
}

async function takeScreenshotJpegBytes() {
  const canvas = await takeScreenshotCanvas()
  return getJpegBytes(canvas)
}

function blobToCanvas(blob, maxWidth, maxHeight) {
  return new Promise((resolve, reject) => {
    const img = new Image()
    const url = URL.createObjectURL(blob)
    img.onload = function () {
      URL.revokeObjectURL(url) // the image is loaded, the object URL is no longer needed
      const canvas = document.createElement('canvas')
      // shrink to fit maxWidth x maxHeight, never enlarge
      const scale = Math.min(
        1,
        maxWidth ? maxWidth / img.width : 1,
        maxHeight ? maxHeight / img.height : 1,
      )
      canvas.width = img.width * scale
      canvas.height = img.height * scale
      const ctx = canvas.getContext('2d')
      ctx.drawImage(img, 0, 0, img.width, img.height, 0, 0, canvas.width, canvas.height)
      resolve(canvas)
    }
    img.onerror = () => {
      URL.revokeObjectURL(url)
      reject(new Error('Error loading blob into Image'))
    }
    img.src = url
  })
}
```
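The preview size in blobToCanvas() follows scale = min(1, maxWidth / width, maxHeight / height), so the image is only ever shrunk, never enlarged, and keeps its aspect ratio. Pulled out as a pure function (the name `fitWithin` is hypothetical), the arithmetic is easy to check:

```javascript
// Compute the size of an image scaled to fit inside maxWidth x maxHeight
// without upscaling. A falsy limit means "no limit", as in blobToCanvas().
function fitWithin(width, height, maxWidth, maxHeight) {
  const scale = Math.min(
    1,
    maxWidth ? maxWidth / width : 1,
    maxHeight ? maxHeight / height : 1,
  );
  // Canvas dimensions are integers, so round down explicitly
  return {
    scale,
    width: Math.floor(width * scale),
    height: Math.floor(height * scale),
  };
}
```

For example, a 1920×1080 screenshot previewed within 300×300 gets a scale of 0.15625, i.e. a 300×168 px preview, while a 200×100 image is left at its original size.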

Demo:

```javascript
// getDisplayMedia must be called from a user gesture handler, hence onclick
document.body.onclick = async () => {
  // take the screenshot
  const screenshotJpegBlob = await takeScreenshotJpegBlob()

  // show preview with max size 300 x 300 px
  const previewCanvas = await blobToCanvas(screenshotJpegBlob, 300, 300)
  previewCanvas.style.position = 'fixed'
  document.body.appendChild(previewCanvas)

  // send it to the server
  const formdata = new FormData()
  formdata.append('screenshot', screenshotJpegBlob, 'screenshot.jpg')
  // no Content-Type header: the browser sets multipart/form-data
  // together with the correct boundary for a FormData body
  await fetch('https://your-web-site.com/', {
    method: 'POST',
    body: formdata,
  })
}

// and click on the page
```


PoC

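As Niklas mentioned, you can use the html2canvas library to take a screenshot with JS in the browser. I will extend his answer with an example of taking a screenshot using this library (a "proof of concept"):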

```javascript
function report() {
  let region = document.querySelector("body"); // whole page
  html2canvas(region, {
    onrendered: function(canvas) {
      let pngUrl = canvas.toDataURL(); // png in dataURL format
      let img = document.querySelector(".screen");
      img.src = pngUrl;

      // here you can allow user to set bug-region
      // and send it with 'pngUrl' to server
    },
  });
}
```

```css
.container {
  margin-top: 10px;
  border: solid 1px black;
}
```

```html
<script src="https://cdnjs.cloudflare.com/ajax/libs/html2canvas/0.4.1/html2canvas.min.js"></script>
<div>Screenshot tester</div>
<button onclick="report()">Take screenshot</button>
<div class="container">
  <img width="75%" class="screen">
</div>
```

In the report() function, inside onrendered, once you have the image as a data URL you can show it to the user, let them draw a "bug region" with the mouse, and then send the screenshot together with the region coordinates to the server.

In this example, an async/await version with a makeScreenshot() function is used; the code is here.
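In html2canvas 1.x the onrendered callback no longer exists; html2canvas(element, options) returns a Promise that resolves to a canvas, so a 1.x variant of makeScreenshot() can simply await it. A sketch under that assumption (with a 1.x build of html2canvas loaded instead of 0.4.1); the optional `render` parameter is a hypothetical hook added only so the function can run without the library, for example in a test:

```javascript
// Promise-based screenshot helper for html2canvas 1.x, where html2canvas()
// returns a Promise<HTMLCanvasElement> instead of calling onrendered.
// `render` defaults to the global html2canvas loaded via <script>; passing
// another function is a hypothetical hook for testing.
async function makeScreenshot(selector = 'body', render = globalThis.html2canvas) {
  const node = document.querySelector(selector);
  if (!node) {
    throw new Error(`No element matches ${selector}`);
  }
  const canvas = await render(node);
  return canvas.toDataURL('image/png'); // PNG as a data URL
}
```

Usage stays the same as in the answer: `q(".screen").src = await makeScreenshot();`.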

UPDATE

A simple example that lets you take a screenshot, select a region, describe the bug and send a POST request (jsfiddle here); the main function is report().

```javascript
async function report() {
  let screenshot = await makeScreenshot(); // png dataUrl
  let img = q(".screen");
  img.src = screenshot;

  let c = q(".bug-container");
  c.classList.remove('hide')
  let box = await getBox();
  c.classList.add('hide');

  send(screenshot, box); // send POST request with bug image, region and description
  alert('To see the POST request with the image go to: Chrome console > Network tab');
}

// ----- Helper functions

let q = s => document.querySelector(s); // query selector helper
window.report = report; // make report visible in the fiddle HTML

async function makeScreenshot(selector = "body") {
  return new Promise((resolve, reject) => {
    let node = document.querySelector(selector);
    html2canvas(node, { onrendered: (canvas) => {
      let pngUrl = canvas.toDataURL();
      resolve(pngUrl);
    }});
  });
}

async function getBox(box) {
  return new Promise((resolve, reject) => {
    let b = q(".bug");
    let r = q(".region");
    let scr = q(".screen");
    let send = q(".send");
    let start = 0;
    let sx, sy, ex, ey = -1;
    r.style.width = 0;
    r.style.height = 0;

    let drawBox = () => {
      r.style.left = (ex > 0 ? sx : sx + ex) + 'px';
      r.style.top = (ey > 0 ? sy : sy + ey) + 'px';
      r.style.width = Math.abs(ex) + 'px';
      r.style.height = Math.abs(ey) + 'px';
    }

    // assign handlers (instead of addEventListener) so that calling report()
    // again replaces them rather than stacking duplicate listeners
    b.onclick = e => {
      if (start == 0) {
        sx = e.pageX;
        sy = e.pageY;
        ex = 0;
        ey = 0;
        drawBox();
      }
      start = (start + 1) % 3;
    };

    b.onmousemove = e => {
      if (start == 1) {
        ex = e.pageX - sx;
        ey = e.pageY - sy;
        drawBox();
      }
    };

    send.onclick = e => {
      start = 0;
      let a = 100 / 75 // the preview img is shown at 75% width
      resolve({
        x: Math.floor(((ex > 0 ? sx : sx + ex) - scr.offsetLeft) * a),
        y: Math.floor(((ey > 0 ? sy : sy + ey) - b.offsetTop) * a),
        width: Math.floor(Math.abs(ex) * a),
        height: Math.floor(Math.abs(ey) * a),
        desc: q('.bug-desc').value
      });
    };
  });
}

function send(image, box) {
  let formData = new FormData();
  let req = new XMLHttpRequest();
  formData.append("box", JSON.stringify(box));
  formData.append("screenshot", image);
  req.open("POST", '/upload/screenshot');
  req.send(formData);
}
```

```css
.bug-container { background: rgba(255,0,0,0.1); margin-top:20px; text-align: center; }
.send { border-radius:5px; padding:10px; background: green; cursor: pointer; }
.region { position: absolute; background: rgba(255,0,0,0.4); }
.example { height: 100px; background: yellow; }
.bug { margin-top: 10px; cursor: crosshair; }
.hide { display: none; }
.screen { pointer-events: none }
```

```html
<script src="https://cdnjs.cloudflare.com/ajax/libs/html2canvas/0.4.1/html2canvas.min.js"></script>
<body>
  <div>Screenshot tester</div>
  <button onclick="report()">Report bug</button>
  <div class="example">Lorem ipsum</div>
  <div class="bug-container hide">
    <div>Select bug region: click once - move mouse - click again</div>
    <div class="bug">
      <img width="75%" class="screen" >
      <div class="region"></div>
    </div>
    <div>
      <textarea class="bug-desc">Describe bug here...</textarea>
    </div>
    <div class="send">SEND BUG</div>
  </div>
</body>
```
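The region-selection code in that example maps mouse coordinates on the preview (shown at 75% width) back to screenshot pixels with a hard-coded factor `a = 100/75`, which is only exact when the preview really is 75% of the screenshot's width. A slightly more general sketch derives the factor from the image's natural and displayed widths and normalises a drag in any direction; `toScreenshotRegion` and its parameter shapes are hypothetical:

```javascript
// Convert a mouse drag on a scaled-down preview image into a rectangle in the
// screenshot's own pixel coordinates.
//   start, end:   { x, y } page coordinates of the two clicks
//   imageBox:     { left, top, width } of the displayed <img> in page coordinates
//   naturalWidth: width of the screenshot in pixels (img.naturalWidth)
function toScreenshotRegion(start, end, imageBox, naturalWidth) {
  // Same role as `a = 100/75` in getBox(), but computed from the actual sizes
  const factor = naturalWidth / imageBox.width;
  // Normalise so the rectangle is valid whichever way the user dragged
  const left = Math.min(start.x, end.x);
  const top = Math.min(start.y, end.y);
  const width = Math.abs(end.x - start.x);
  const height = Math.abs(end.y - start.y);
  return {
    x: Math.floor((left - imageBox.left) * factor),
    y: Math.floor((top - imageBox.top) * factor),
    width: Math.floor(width * factor),
    height: Math.floor(height * factor),
  };
}
```

In the page, `imageBox` could come from `img.getBoundingClientRect()` plus `window.scrollX` / `window.scrollY`, so it uses the same page coordinates as `e.pageX` / `e.pageY`.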

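Uncaught (in promise) Error: Element is not attached to a Document. → Ah, found the solution: https://dev59.com/v77pa4cB1Zd3GeqPqg7g - Avatar

onrendered no longer exists in html2canvas 1.4.1, so it is never triggered. - Avatar

Here is a complete screenshot example that works in Chrome as of 2021. The end result is a blob ready to be transmitted. The flow is: request media > grab frame > draw to canvas > transfer to blob. If you want to be more memory-efficient, explore OffscreenCanvas or ImageBitmapRenderingContext.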

https://jsfiddle.net/v24hyd3q/1/

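```javascript
// Request media (must be called from a user gesture, e.g. a click handler)
navigator.mediaDevices.getDisplayMedia()
  .then(stream => {
    // Grab frame from stream
    // (ImageCapture is not available in every browser)
    const track = stream.getVideoTracks()[0];
    const capture = new ImageCapture(track);
    // return the inner promise so .catch below also sees grabFrame errors
    return capture.grabFrame().then(bitmap => {
      // Stop sharing
      stream.getTracks().forEach(t => t.stop());

      // Draw the bitmap to canvas
      // (or use document.getElementById('canvas') if the page has a <canvas id='canvas'>)
      const canvas = document.createElement('canvas');
      canvas.width = bitmap.width;
      canvas.height = bitmap.height;
      canvas.getContext('2d').drawImage(bitmap, 0, 0);

      // Grab blob from canvas
      canvas.toBlob(blob => {
        // Do things with blob here
        console.log('output blob:', blob);
      });
    });
  })
  .catch(e => console.log(e));
```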
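The answer suggests OffscreenCanvas or ImageBitmapRenderingContext for better memory use. One way to combine them, assuming a browser that supports both: transfer the ImageBitmap from grabFrame() into a 'bitmaprenderer' context, which takes ownership of the bitmap instead of copying its pixels, then encode it with convertToBlob(). `bitmapToBlob` and its `createCanvas` parameter are hypothetical names; check browser support before relying on this.

```javascript
// Encode an ImageBitmap (e.g. from ImageCapture.grabFrame()) as a Blob
// without an on-page <canvas>. The 'bitmaprenderer' context takes ownership
// of the bitmap rather than copying its pixels.
// `createCanvas` defaults to OffscreenCanvas; it is a hypothetical hook so the
// function can be exercised where OffscreenCanvas does not exist.
async function bitmapToBlob(
  bitmap,
  { type = 'image/png', quality } = {},
  createCanvas = (w, h) => new OffscreenCanvas(w, h),
) {
  const canvas = createCanvas(bitmap.width, bitmap.height);
  const ctx = canvas.getContext('bitmaprenderer');
  // After this call `bitmap` is detached and must not be reused
  ctx.transferFromImageBitmap(bitmap);
  // quality only applies to lossy types such as image/jpeg
  return canvas.convertToBlob({ type, quality });
}
```

Inside the grabFrame() callback, `bitmapToBlob(bitmap, { type: 'image/jpeg', quality: 0.95 }).then(blob => ...)` would replace the canvas/toBlob steps.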


…<canvas id='canvas' /> tag. - Markus-Hermann

Here is an example using getDisplayMedia:

```javascript
document.body.innerHTML = '<video style="width: 100%; height: 100%; border: 1px black solid;"></video>';

navigator.mediaDevices.getDisplayMedia()
  .then(mediaStream => {
    const video = document.querySelector('video');
    video.srcObject = mediaStream;
    video.onloadedmetadata = e => {
      // pause only once play() has started, to avoid interrupting the play() promise
      video.play().then(() => video.pause());
    };
  })
  .catch(err => console.log(`${err.name}: ${err.message}`));
```

The Screen Capture API documentation is also worth a read.

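You can try my new JS library: screenshot.js.

It can take real screenshots.

You can load the script:

```html
<script src="https://raw.githubusercontent.com/amiad/screenshot.js/master/screenshot.js"></script>
```

(raw.githubusercontent.com serves files as text/plain, which browsers refuse to execute as a script, so you may need to host a copy of screenshot.js yourself.)

and take a screenshot:

```javascript
new Screenshot({success: img => {
  // callback function
  myimage = img;
}});
```

You can read about more options on the project page.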



Screenshot taken by Jason Small, posted in a
