I wrote an example that captures the computer's display and converts it to an OpenCV Mat.

    #include <opencv2/opencv.hpp>
    #include <ApplicationServices/ApplicationServices.h>

    using namespace cv;

    int main(int argc, char* const argv[])
    {
        // Size of the main display as reported by Core Graphics.
        size_t width = CGDisplayPixelsWide(CGMainDisplayID());
        size_t height = CGDisplayPixelsHigh(CGMainDisplayID());

        // RGBA buffer that Core Graphics draws into.
        Mat im(cv::Size(static_cast<int>(width), static_cast<int>(height)), CV_8UC4);
        Mat bgrim;      // BGR copy for OpenCV
        Mat resizedim;  // half-size copy for display

        // Bitmap context backed by im's pixel data.
        CGColorSpaceRef colorSpace = CGColorSpaceCreateDeviceRGB();
        CGContextRef contextRef = CGBitmapContextCreate(
            im.data, im.cols, im.rows,
            8, im.step[0],
            colorSpace, kCGImageAlphaPremultipliedLast | kCGBitmapByteOrderDefault);

        while (true)
        {
            // Grab the main display and draw it into im.
            CGImageRef imageRef = CGDisplayCreateImage(CGMainDisplayID());
            if (!imageRef)
                break;  // capture failed
            CGContextDrawImage(contextRef, CGRectMake(0, 0, width, height), imageRef);
            CGImageRelease(imageRef);

            cvtColor(im, bgrim, COLOR_RGBA2BGR);
            resize(bgrim, resizedim, cv::Size(), 0.5, 0.5);
            imshow("test", resizedim);

            if (waitKey(10) == 27)  // Esc exits the loop
                break;
        }

        CGContextRelease(contextRef);
        CGColorSpaceRelease(colorSpace);
        return 0;
    }
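The color conversion step is the one part of the loop that is easy to get backwards: the bitmap context fills the buffer in RGBA order, while OpenCV's display functions expect BGR. As a rough illustration only, here is that conversion written without either library. `rgbaToBgr` is a hypothetical helper name, not an OpenCV or Core Graphics function, and it assumes tightly packed pixels with no row padding.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper (not part of OpenCV or Core Graphics):
// converts tightly packed RGBA pixels to BGR by dropping the alpha
// byte and swapping the red and blue channels.
std::vector<std::uint8_t> rgbaToBgr(const std::vector<std::uint8_t>& rgba)
{
    std::vector<std::uint8_t> bgr;
    bgr.reserve(rgba.size() / 4 * 3);
    for (std::size_t i = 0; i + 3 < rgba.size(); i += 4) {
        bgr.push_back(rgba[i + 2]);  // blue
        bgr.push_back(rgba[i + 1]);  // green
        bgr.push_back(rgba[i]);      // red
    }
    return bgr;
}
```

Passing a single opaque red pixel `{255, 0, 0, 255}` yields `{0, 0, 255}`, which is red in BGR order. In the real program `cvtColor` does this for you and also handles the row stride.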

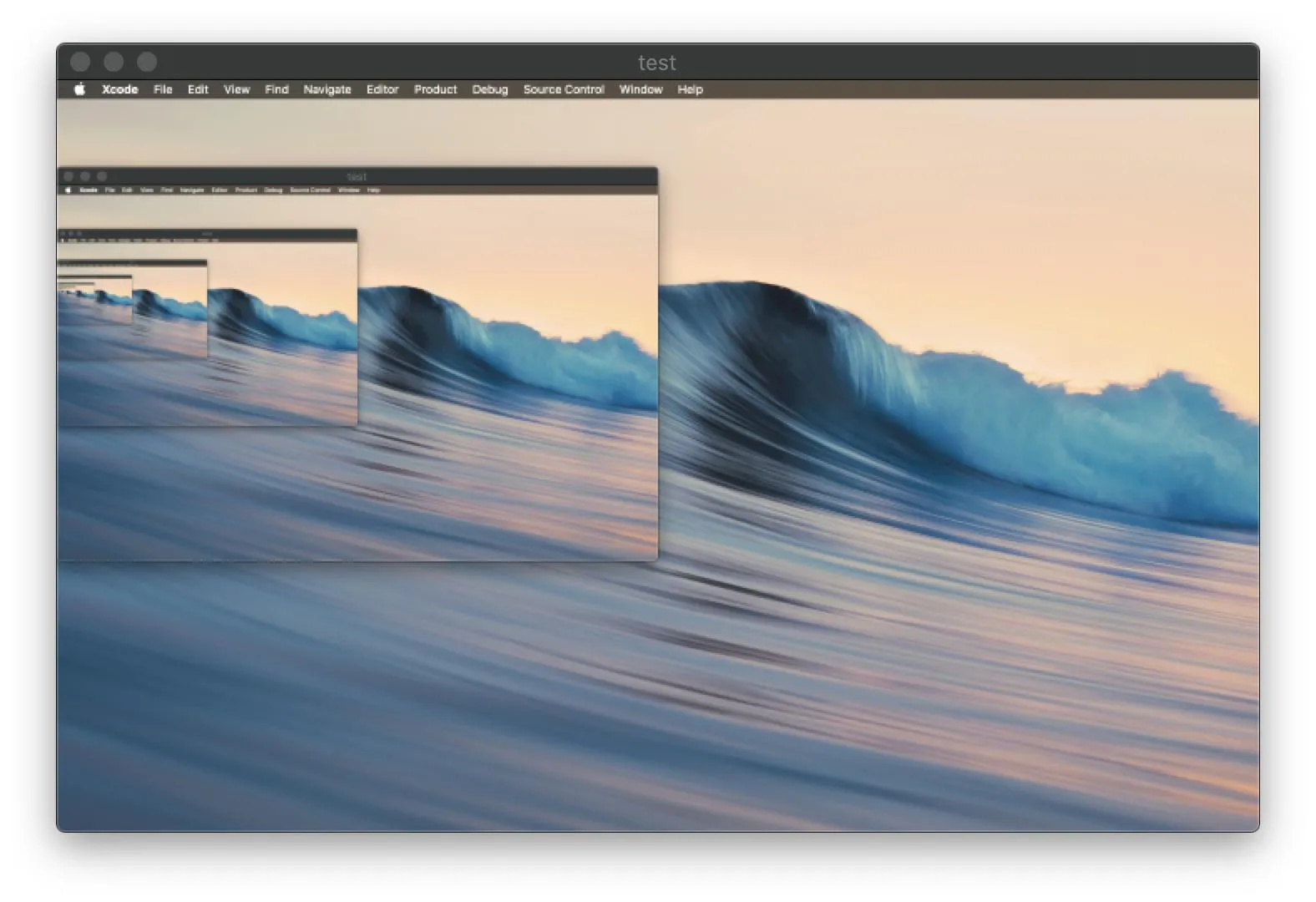

The image above shows the result.

I expected it to capture what was currently on my screen, but it actually captured only the desktop wallpaper. CGMainDisplayID() may be a hint as to why.

Anyway, I hope this gets you a bit closer to your goal.