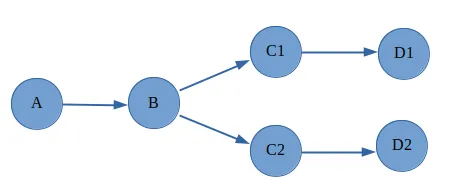

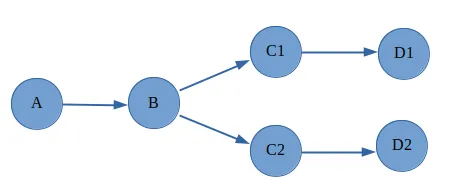

我已经在Airflow中运行了以下dag:

当执行以上dag时,它将按照以下顺序串行运行:

当执行以上dag时,它将按照以下顺序串行运行:

A -> B -> C1 -> C2 -> D1 -> D2

A -> B -> C2 -> C1 -> D2 -> D1

但我的要求是同时运行C1和C2任务。这是我airflow.cfg的一部分。

当执行以上dag时,它将按照以下顺序串行运行:

当执行以上dag时,它将按照以下顺序串行运行:A -> B -> C1 -> C2 -> D1 -> D2

A -> B -> C2 -> C1 -> D2 -> D1

但我的要求是同时运行C1和C2任务。这是我airflow.cfg的一部分。

# The executor class that airflow should use. Choices include

# SequentialExecutor, LocalExecutor, CeleryExecutor

executor = CeleryExecutor

#executor = LocalExecutor

# The amount of parallelism as a setting to the executor. This defines

# the max number of task instances that should run simultaneously

# on this airflow installation

parallelism = 32

# The number of task instances allowed to run concurrently by the scheduler

dag_concurrency = 16

# Number of workers to refresh at a time. When set to 0, worker refresh is

# disabled. When nonzero, airflow periodically refreshes webserver workers by

# bringing up new ones and killing old ones.

worker_refresh_batch_size = 1

# Number of seconds to wait before refreshing a batch of workers.

worker_refresh_interval = 30

# Secret key used to run your flask app

secret_key = temporary_key

# Number of workers to run the Gunicorn web server

workers = 4

[celery]

# This section only applies if you are using the CeleryExecutor in

# [core] section above

# The app name that will be used by celery

celery_app_name = airflow.executors.celery_executor

# The concurrency that will be used when starting workers with the

# "airflow worker" command. This defines the number of task instances that

# a worker will take, so size up your workers based on the resources on

# your worker box and the nature of your tasks

celeryd_concurrency = 16

# The scheduler can run multiple threads in parallel to schedule dags.

# This defines how many threads will run. However airflow will never

# use more threads than the amount of cpu cores available.

max_threads = 2

SequentialExecutor才会发生这种情况,但这明显不是你在 airflow.cfg 中设置的选项。你可以分享一下echo $AIRFLOW_HOME命令的输出吗? - Tameem