我有一个包含二进制列类型的DataFrame。

DataFrame:

我正在尝试阅读此列以提取几何格式。

从我的研究中,我发现了一个来自geospark库的函数ST_GeomFromWKB,它以长二进制作为参数。

所以我正在编写以下代码:

我得到了以下输出,但它不正确:

如何读取该列以获取正确的几何值?

编辑:

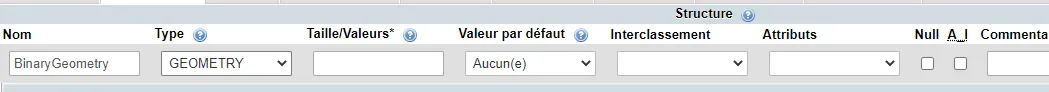

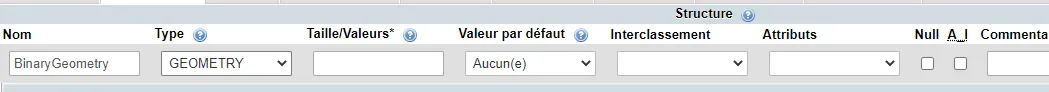

MySQL上的列结构 当我跟踪数据时,似乎是这样的:

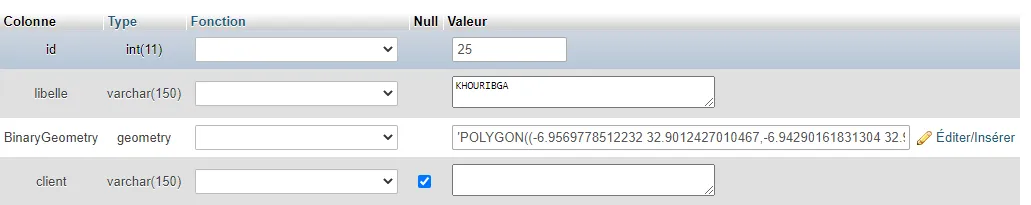

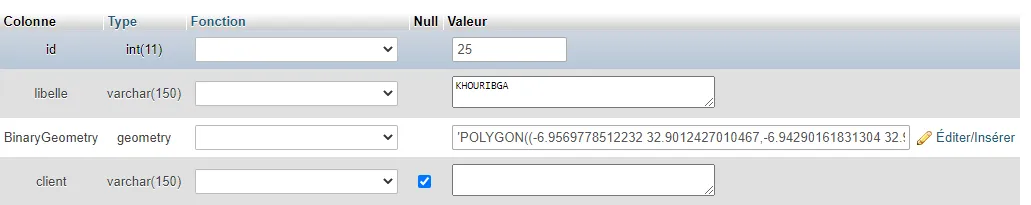

当我跟踪数据时,似乎是这样的:

当我点击

当我点击

文本文件包含以下数据:

编辑2:

我在MySQL数据库中有这张表。

当我在Spark中加载此表时,我得到以下内容:

然后要获取原始数据“Polygon .........”,我执行以下代码:

但我收到了以下错误信息:

编辑3

DataFrame:

+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

|BinaryGeometry

+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

|[00 00 00 00 01 03 00 00 00 01 00 00 00 11 00 00 00 04 00 F0 00 DC CC 1A C0 87 14 01 81 1E 1B 41 40 FC FF EF 00 68 AA 1A C0 BF EE 57 20 85 19 41 40 04 00 F0 00 8C 86 1A C0 CC DC 8B DC AE 1A 41 40 FF FF EF 00 44 74 1A C0 CA 9D 5D 61 10 1C 41 40 FF FF EF 00 64 63 1A C0 BF 1F 98 0B 3A 1D 41 40 FF FF EF 00 44 47 1A C0 E4 6B A0 DD CE 1D 41 40 FC FF EF 00 D8 2B 1A C0 54 E4 71 67 6D 1C 41 40 FF FF EF 00 44 1A 1A C0 BF 1F 98 0B 3A 1D 41 40 02 00 F0 00 80 0B 1A C0 0D 80 00 13 2F 23 41 40 02 00 F0 00 B0 35 1A C0 CC F6 23 F8 BD 26 41 40 04 00 F0 00 0C 43 1A C0 73 1A 44 AF 16 26 41 40 02 00 F0 00 40 5A 1A C0 FF 54 9C 7C 2D 27 41 40 02 00 F0 00 50 68 1A C0 87 6E B9 42 44 28 41 40 02 00 F0 00 00 7C 1A C0 78 2B 85 BA F5 26 41 40 FC FF EF 00 18 91 1A C0 49 96 6F 58 C6 28 41 40 02 00 F0 00 B0 BC 1A C0 91 FA 4B 0E 7F 20 41 40 04 00 F0 00 DC CC 1A C0 87 14 01 81 1E 1B 41 40] |

+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

我正在尝试阅读此列以提取几何格式。

从我的研究中,我发现了一个来自geospark库的函数ST_GeomFromWKB,它以长二进制作为参数。

所以我正在编写以下代码:

df.withColumn("BinaryGeometry", hex(col("BinaryGeometry")))

.withColumn("BinaryGeometry",expr("ST_GeomFromWKB(BinaryGeometry)"))

我得到了以下输出,但它不正确:

POINT(0 0)

如何读取该列以获取正确的几何值?

编辑:

MySQL上的列结构

当我跟踪数据时,似乎是这样的:

当我跟踪数据时,似乎是这样的:

当我点击

当我点击 [GEOMETRY - 113 o]时

会下载一个文本文件。文本文件包含以下数据:

ے FPہ>Pے{ح@@€àOہZDچ4ح@@ہ¨KہإضآTح@@àمJہî=Oƒح@@û ڑLہCBsn«ح@@ے FPہ>Pے{ح@@

编辑2:

我在MySQL数据库中有这张表。

+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|BinaryGeometry

+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|'POLYGON((-6.70005799736828 34.2118684058451,-6.66641236748546 34.1993751935347,-6.63139344658703 34.208461349766,-6.6135406633839 34.2192498881446,-6.5970611711964 34.2283339016717,-6.5695953508839 34.2328755410488,-6.54281617607921 34.2220887476075,-6.5256500383839 34.2283339016717,-6.51123048271984 34.2748740913813,-6.55242921318859 34.3026724029165,-6.56547547783703 34.2975672800575,-6.58813477959484 34.3060756457653,-6.60186768975109 34.314583149474,-6.62109376396984 34.3043740415805,-6.64169312920421 34.318553022848,-6.68426515068859 34.2538774367323,-6.70005799736828 34.2118684058451))',0

+-----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

当我在Spark中加载此表时,我得到以下内容:

+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

|BinaryGeometry

+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

|[00 00 00 00 01 03 00 00 00 01 00 00 00 11 00 00 00 04 00 F0 00 DC CC 1A C0 87 14 01 81 1E 1B 41 40 FC FF EF 00 68 AA 1A C0 BF EE 57 20 85 19 41 40 04 00 F0 00 8C 86 1A C0 CC DC 8B DC AE 1A 41 40 FF FF EF 00 44 74 1A C0 CA 9D 5D 61 10 1C 41 40 FF FF EF 00 64 63 1A C0 BF 1F 98 0B 3A 1D 41 40 FF FF EF 00 44 47 1A C0 E4 6B A0 DD CE 1D 41 40 FC FF EF 00 D8 2B 1A C0 54 E4 71 67 6D 1C 41 40 FF FF EF 00 44 1A 1A C0 BF 1F 98 0B 3A 1D 41 40 02 00 F0 00 80 0B 1A C0 0D 80 00 13 2F 23 41 40 02 00 F0 00 B0 35 1A C0 CC F6 23 F8 BD 26 41 40 04 00 F0 00 0C 43 1A C0 73 1A 44 AF 16 26 41 40 02 00 F0 00 40 5A 1A C0 FF 54 9C 7C 2D 27 41 40 02 00 F0 00 50 68 1A C0 87 6E B9 42 44 28 41 40 02 00 F0 00 00 7C 1A C0 78 2B 85 BA F5 26 41 40 FC FF EF 00 18 91 1A C0 49 96 6F 58 C6 28 41 40 02 00 F0 00 B0 BC 1A C0 91 FA 4B 0E 7F 20 41 40 04 00 F0 00 DC CC 1A C0 87 14 01 81 1E 1B 41 40] |

+----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------+

然后要获取原始数据“Polygon .........”,我执行以下代码:

df.withColumn("geom",expr("ST_GeomFromWKB(BinaryGeometry)"));

但我收到了以下错误信息:

20/08/10 22:28:50 ERROR Executor: Exception in task 87.0 in stage 39.0 (TID 929) java.lang.ClassCastException: [B cannot be cast to org.apache.spark.unsafe.types.UTF8String

at org.apache.spark.sql.geosparksql.expressions.ST_GeomFromWKB.eval(Constructors.scala:174)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$SpecificUnsafeProjection.writeFields_0_39$(Unknown Source)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$SpecificUnsafeProjection.apply(Unknown Source)

at org.apache.spark.sql.catalyst.expressions.GeneratedClass$SpecificUnsafeProjection.apply(Unknown Source)

编辑3

ST_AsText(column)。 - Hedrack