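Without having seen your code, the most likely cause of the poor performance is garbage collection activity. To demonstrate this, I wrote the following program: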

```java
import java.lang.management.ManagementFactory;
import java.util.*;
import java.util.concurrent.*;

public class TestMap {
    static final int NB_ENTITIES = 20_000_000, NB_TASKS = 2;
    // Shared by all tasks, hence a thread-safe map
    static Map<String, String> map = new ConcurrentHashMap<>();

    public static void main(String[] args) {
        try {
            System.out.printf("running with nb entities = %,d, nb tasks = %,d, VM args = %s%n",
                    NB_ENTITIES, NB_TASKS, ManagementFactory.getRuntimeMXBean().getInputArguments());
            ExecutorService executor = Executors.newFixedThreadPool(NB_TASKS);
            // Assumes NB_ENTITIES is divisible by NB_TASKS
            int entitiesPerTask = NB_ENTITIES / NB_TASKS;
            List<Future<?>> futures = new ArrayList<>(NB_TASKS);
            long startTime = System.nanoTime();
            // Split the index range into NB_TASKS contiguous, inclusive chunks
            for (int i = 0; i < NB_TASKS; i++) {
                MyTask task = new MyTask(i * entitiesPerTask, (i + 1) * entitiesPerTask - 1);
                futures.add(executor.submit(task));
            }
            // Wait for all tasks to finish
            for (Future<?> f : futures) {
                f.get();
            }
            long elapsed = System.nanoTime() - startTime;
            executor.shutdownNow();
            // Request a full GC, then estimate how much heap is still in use
            System.gc();
            Runtime rt = Runtime.getRuntime();
            // totalMemory() is the currently committed heap, maxMemory() only the upper limit.
            // With -Xms equal to -Xmx (as in all runs below) the two are essentially the same.
            long usedMemory = rt.totalMemory() - rt.freeMemory();
            System.out.printf("processing completed in %,d ms, usedMemory after GC = %,d bytes%n",
                    elapsed / 1_000_000L, usedMemory);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }

    static class MyTask implements Runnable {
        private final int startIdx, endIdx;

        public MyTask(final int startIdx, final int endIdx) {
            this.startIdx = startIdx;
            this.endIdx = endIdx;
        }

        @Override
        public void run() {
            long startTime = System.nanoTime();
            // endIdx is inclusive
            for (int i = startIdx; i <= endIdx; i++) {
                map.put("sambit:rout:" + i, "C:\\Images\\Provision_Images");
            }
            long elapsed = System.nanoTime() - startTime;
            System.out.printf("task[%,d - %,d], completed in %,d ms%n",
                    startIdx, endIdx, elapsed / 1_000_000L);
        }
    }
}
```

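At the end of processing, this code calls System.gc() and immediately afterwards subtracts Runtime.freeMemory() from the heap size to get an approximation of the memory in use (in all the runs below -Xms equals -Xmx, so the heap size reported by totalMemory() is essentially the same as maxMemory()). This shows that a map with 20 million entries needs about 2.4 GB (a little over 2.2 GiB), which is quite considerable. I ran it with 1 and with 2 threads, for various values of the -Xms and -Xmx JVM arguments. Here is the resulting output (just for clarity: 2560m = 2.5g):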

```text
running with nb entities = 20,000,000, nb tasks = 1, VM args = [-Xms2560m, -Xmx2560m]
task[0 - 19,999,999], completed in 11,781 ms
processing completed in 11,782 ms, usedMemory after GC = 2,379,068,760 bytes

running with nb entities = 20,000,000, nb tasks = 2, VM args = [-Xms2560m, -Xmx2560m]
task[0 - 9,999,999], completed in 8,269 ms
task[10,000,000 - 19,999,999], completed in 12,385 ms
processing completed in 12,386 ms, usedMemory after GC = 2,379,069,480 bytes

running with nb entities = 20,000,000, nb tasks = 1, VM args = [-Xms3g, -Xmx3g]
task[0 - 19,999,999], completed in 12,525 ms
processing completed in 12,527 ms, usedMemory after GC = 2,398,339,944 bytes

running with nb entities = 20,000,000, nb tasks = 2, VM args = [-Xms3g, -Xmx3g]
task[0 - 9,999,999], completed in 12,220 ms
task[10,000,000 - 19,999,999], completed in 12,264 ms
processing completed in 12,265 ms, usedMemory after GC = 2,382,777,776 bytes

running with nb entities = 20,000,000, nb tasks = 1, VM args = [-Xms4g, -Xmx4g]
task[0 - 19,999,999], completed in 7,363 ms
processing completed in 7,364 ms, usedMemory after GC = 2,402,467,040 bytes

running with nb entities = 20,000,000, nb tasks = 2, VM args = [-Xms4g, -Xmx4g]
task[0 - 9,999,999], completed in 5,466 ms
task[10,000,000 - 19,999,999], completed in 5,511 ms
processing completed in 5,512 ms, usedMemory after GC = 2,381,821,576 bytes

running with nb entities = 20,000,000, nb tasks = 1, VM args = [-Xms8g, -Xmx8g]
task[0 - 19,999,999], completed in 7,778 ms
processing completed in 7,779 ms, usedMemory after GC = 2,438,159,312 bytes

running with nb entities = 20,000,000, nb tasks = 2, VM args = [-Xms8g, -Xmx8g]
task[0 - 9,999,999], completed in 5,739 ms
task[10,000,000 - 19,999,999], completed in 5,784 ms
processing completed in 5,785 ms, usedMemory after GC = 2,396,478,680 bytes
```
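To put the memory figure in perspective, dividing the used memory after GC by the number of entries gives a rough per-entry cost, shown here for the smallest and largest values in the output. Treat it as an upper bound: usedMemory counts every live object on the heap, not only the map. Since the value is the same interned string literal for every entry, most of this cost comes from the distinct key strings and the map's internal nodes.

$$
\frac{2{,}379{,}068{,}760\ \text{bytes}}{20{,}000{,}000\ \text{entries}} \approx 119\ \text{bytes/entry},
\qquad
\frac{2{,}438{,}159{,}312\ \text{bytes}}{20{,}000{,}000\ \text{entries}} \approx 122\ \text{bytes/entry}
$$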

These results can be summarized in the following table:

| Heap size (GB) | Exec time, 1 thread (ms) | Exec time, 2 threads (ms) |
|---:|---:|---:|
| 2.5 | 11782 | 12386 |
| 3.0 | 12527 | 12265 |
| 4.0 | 7364 | 5512 |
| 8.0 | 7779 | 5785 |
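To make the comparison explicit, the 1-thread time can be divided by the 2-thread time for each heap size. The sketch below is a hypothetical helper (the class name Speedup is mine), and the timings are simply copied from the table above.

```java
import java.util.Locale;

// Hypothetical helper: ratio of 1-thread to 2-thread execution time per heap size.
// Timings are copied from the table; a ratio above 1.0 means the second thread helped.
public class Speedup {

    // speedup = time with 1 thread / time with 2 threads
    static double speedup(long oneThreadMs, long twoThreadsMs) {
        return (double) oneThreadMs / twoThreadsMs;
    }

    public static void main(String[] args) {
        String[] heapSizesGb = {"2.5", "3.0", "4.0", "8.0"};
        long[] oneThreadMs = {11782, 12527, 7364, 7779};
        long[] twoThreadsMs = {12386, 12265, 5512, 5785};

        for (int i = 0; i < heapSizesGb.length; i++) {
            // Locale.ROOT keeps '.' as the decimal separator regardless of the default locale
            System.out.printf(Locale.ROOT, "heap %s GB: speedup = %.2f%n",
                    heapSizesGb[i], speedup(oneThreadMs[i], twoThreadsMs[i]));
        }
    }
}
```

This prints speedups of 0.95, 1.02, 1.34 and 1.34. With the 2.5 and 3 GB heaps the second thread brings essentially nothing, while with 4 and 8 GB it gives about 1.34x, which is consistent with GC being the bottleneck for the smaller heaps.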

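I also observed that, with the 2.5g and 3g heap sizes, CPU usage was high during the whole processing time, with spikes at 100%, because of GC activity, whereas with 4g and 8g this was only observed at the very end, because of the System.gc() call.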
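Instead of watching a CPU monitor, GC activity can also be quantified from inside the JVM. Here is a minimal sketch, not part of the original test, that reads the standard GarbageCollectorMXBean counters before and after a workload. The class name GcStats and the reduced 1,000,000-entry workload are hypothetical, and the exact meaning of "collection time" depends on the collector in use. Running the JVM with -verbose:gc additionally logs each collection.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

public class GcStats {

    // Returns {total collection count, total accumulated collection time in ms}
    // summed over all collectors. Both getters return -1 when the value is
    // undefined for a collector, so negative values are skipped.
    static long[] snapshot() {
        long count = 0, timeMs = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long c = gc.getCollectionCount();
            long t = gc.getCollectionTime();
            if (c > 0) count += c;
            if (t > 0) timeMs += t;
        }
        return new long[] {count, timeMs};
    }

    public static void main(String[] args) {
        long[] before = snapshot();
        long start = System.nanoTime();

        // Hypothetical, smaller workload modeled on MyTask.run()
        Map<String, String> map = new ConcurrentHashMap<>();
        for (int i = 0; i < 1_000_000; i++) {
            map.put("sambit:rout:" + i, "C:\\Images\\Provision_Images");
        }

        long elapsedMs = (System.nanoTime() - start) / 1_000_000L;
        long[] after = snapshot();
        System.out.printf("entries = %,d, elapsed = %,d ms, GC runs = %,d, GC time = %,d ms%n",
                map.size(), elapsedMs, after[0] - before[0], after[1] - before[1]);
    }
}
```

Comparing the GC time with the elapsed time for different -Xmx values would show in numbers how much of each run goes to collecting.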

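In conclusion:

1. If your heap is not sized appropriately, garbage collection will wipe out any performance gain you hope to get. Make it large enough to avoid the side effects of long GC pauses.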

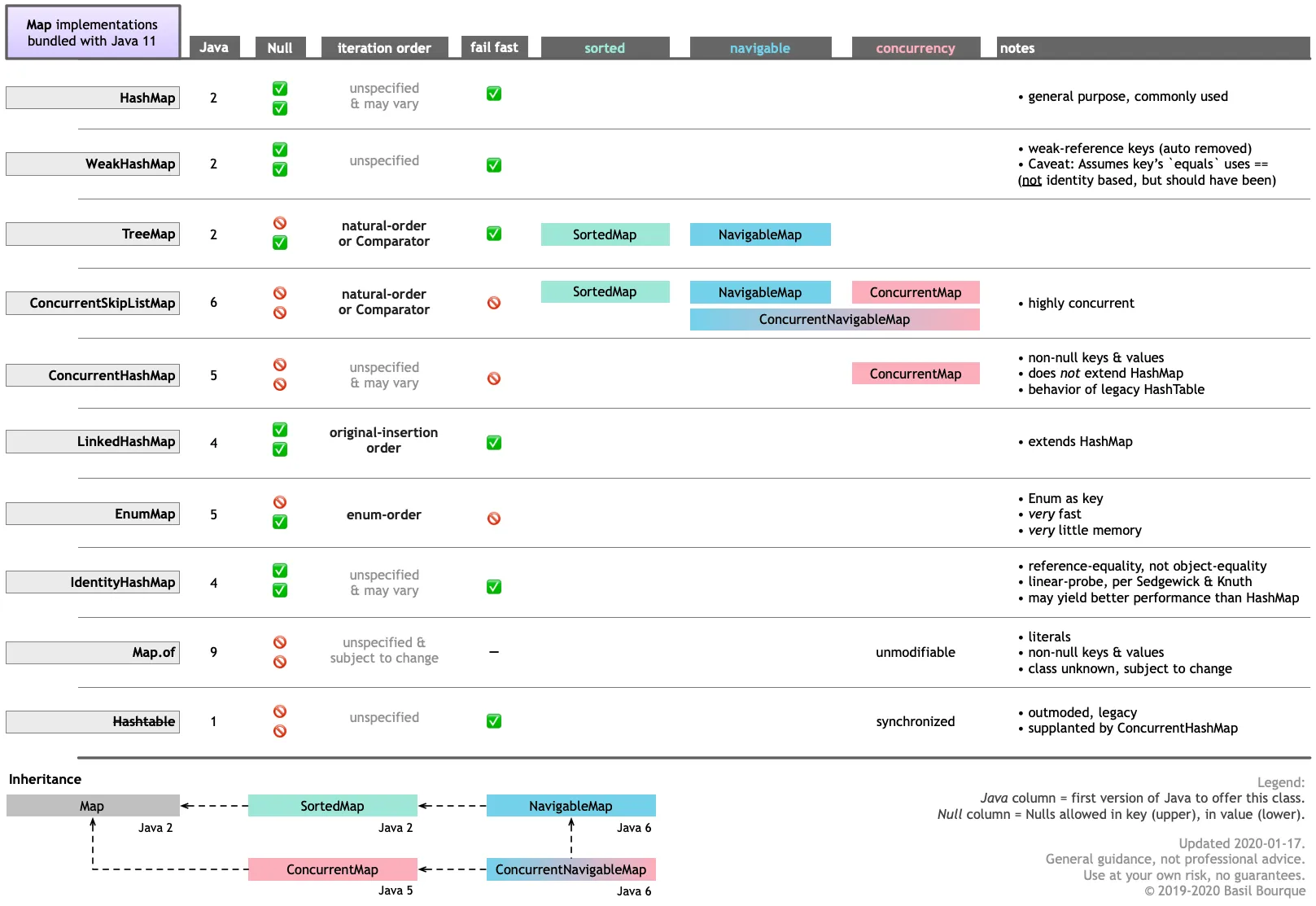

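2. You must also be aware that using a concurrent collection such as ConcurrentHashMap carries a significant performance overhead. To illustrate this, I slightly modified the code so that each task uses its own HashMap, and at the end all the maps are aggregated (using Map.putAll()) into the first task's map. The processing time dropped to around 3200 ms.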
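The per-task HashMap variant from item 2 can be sketched as follows. The modified program itself isn't shown, so this is a reconstruction under assumptions: the class name TestLocalMaps and the helpers fill() and run() are hypothetical, keys and values are the same as in TestMap, and each task is a Callable that returns its local map. Unlike TestMap, the last task also picks up any remainder when the entity count isn't divisible by the number of tasks.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

public class TestLocalMaps {

    // Each task fills its own, unshared HashMap for the inclusive range [startIdx, endIdx]
    static Map<String, String> fill(int startIdx, int endIdx) {
        Map<String, String> local = new HashMap<>();
        for (int i = startIdx; i <= endIdx; i++) {
            local.put("sambit:rout:" + i, "C:\\Images\\Provision_Images");
        }
        return local;
    }

    static Map<String, String> run(int nbEntities, int nbTasks) throws Exception {
        ExecutorService executor = Executors.newFixedThreadPool(nbTasks);
        try {
            int perTask = nbEntities / nbTasks;
            List<Future<Map<String, String>>> futures = new ArrayList<>(nbTasks);
            for (int t = 0; t < nbTasks; t++) {
                final int start = t * perTask;
                // The last task also takes the remainder of the division
                final int end = (t == nbTasks - 1) ? nbEntities - 1 : (t + 1) * perTask - 1;
                futures.add(executor.submit(() -> fill(start, end)));
            }
            // Merge into the first task's map with Map.putAll(), single-threaded,
            // once each task's result is available
            Map<String, String> result = futures.get(0).get();
            for (int t = 1; t < nbTasks; t++) {
                result.putAll(futures.get(t).get());
            }
            return result;
        } finally {
            executor.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        long start = System.nanoTime();
        Map<String, String> map = run(20_000_000, 2);
        System.out.printf("%,d entries in %,d ms%n", map.size(), (System.nanoTime() - start) / 1_000_000L);
    }
}
```

Because each HashMap is confined to the thread that fills it, no synchronization is needed during the fill phase, and Future.get() guarantees that the merging thread sees each completed map. main() fills 20 million entries like TestMap, so it needs a similarly large heap (for example the -Xms8g -Xmx8g setting used above); the ~3200 ms figure comes from the answer's own modified version, not from this sketch.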

ConcurrentHashMap, yet the last sentence says HashMap. So which one is it? - Eugene